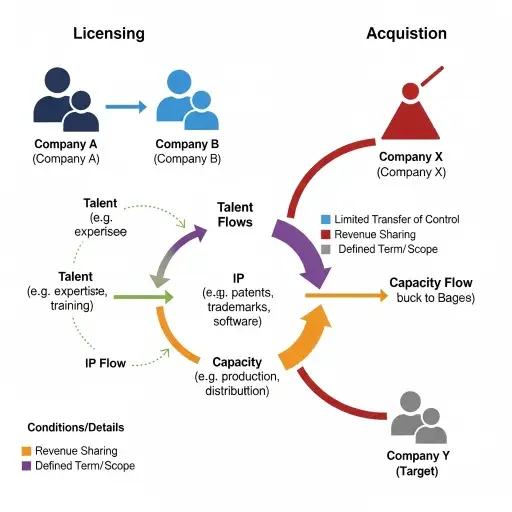

Every licensing announcement arrives as a small event: a PDF, a press release, a PR shorthand that turns technical nuance into executive bullet points. NVIDIA’s recent licensing of Groq assets should not be filed under routine corporate housekeeping. Read the clauses and the optics together and you get something else — a deliberate acqui‑hire by proxy that accelerates inference capacity while folding personnel, compiler pathways and operational know‑how into NVIDIA’s platform strategy.

Start with the binding constraint: platform. For enterprises buying AI services, the hard limit isn’t only the silicon on order; it’s the integration stack — runtimes, compilers, driver support and the team that can make a new accelerator handle real‑world models and SLAs. Groq offered a tight stack optimized for low‑latency inference. By licensing Groq, NVIDIA hasn’t merely acquired IP; it has contracted for a set of human‑scale primitives: code paths, optimization heuristics and the institutional memory required to turn silicon into predictable capacity.

That matters because procurement today is re‑priced around delivered outcomes, not die size. An enterprise doesn’t want 8,000 GPU cores; it wants a predictable 99th‑percentile latency at 10,000 QPS for its recommender model. If NVIDIA can internalize Groq’s fast‑path inference tricks, it can sell inference capacity that solves latency, determinism and cost predictability in one packaged SKU — and that alters how CFOs and infrastructure teams budget.

Practically, three shifts will ripple through chip cycles and enterprise budgets.

First: the unit economics of inference change. Historically, chip cycles are a fungible accounting line item — buys of GPUs now, potential savings later. Platform‑level optimizations reduce cycles-per-inference. But when those optimizations are delivered as part of a vendor‑controlled stack, the buyer’s decision becomes a service trade-off: pay more for vendor‑delivered efficient cycles, or invest in in‑house ops and tooling. The former consolidates spend with platform providers; the latter requires scale, engineering time and continued tooling risk.

Second: procurement rhythms compress. Chip cycles move on a cadence — product generations, fab schedules, capacity forecasts. If NVIDIA can package Groq‑derived capabilities into existing product lines or cloud‑offered instances, customers can consume inference capacity on monthly or consumption billing, smoothing the spikes that once drove large capex purchases. That undermines the blockbuster refresh cycle and favors continuous subscription economics.

Third: competitive responses will be stratified, not symmetric. Rival silicon vendors can match raw performance, but Groq’s value resides in its entire stack and the people who tuned it. For challengers, the choice is stark: replicate the integration work (costly and time‑consuming), pursue their own acqui‑hire equivalents, or compete purely on price and hope enterprises accept higher integration risk. Expect to see three plays: (1) more licensing/partnership deals that mimic NVIDIA’s approach; (2) focused acqui‑hires; and (3) cloud incumbents internalizing optimized inference stacks as proprietary differentiation.

The acqui‑hire framing also reframes M&A signaling. An outright acquisition would have signaled full transfer of talent and culture — and a larger balance‑sheet hit. A licensing agreement is softer: it transfers IP and operational patterns without the integration headaches regulators and boards dread. For NVIDIA, this is surgical: accelerate roadmap, avoid cultural friction, and control the go‑to‑market effect without absorbing liabilities and payroll overnight.

Investors should translate this into a few concrete expectations. Revenue mix will tilt toward managed inference and cloud instances with higher gross margins; capital intensity on pure chip volume could moderate as more value accrues at the software and delivery layer. Gross margin per inference may climb, even if per‑unit silicon margin compresses, because software and managed service premiums are stickier and invite recurring revenue recognition.

Risk vectors are clear. Licensing can obscure who owns long‑tail maintenance and debugging costs; enterprises might find themselves dependent on vendor timelines for critical fixes. Second, competitors will weaponize openness — offering modular stacks that let customers avoid vendor lock‑in. Third, regulatory scrutiny around dominant platform leverage could focus on whether such licensing arrangements cement an already wide moat into anti‑competitive behavior.

For procurement teams and CIOs, the practical checklist is short and decisive. Reprice TCO around delivered latency and operational SLAs, not raw teraflops. Negotiate explicit porting and maintenance SLAs in any vendor‑delivered inference offering. Model hybrid strategies: retain on‑prem control for mission‑critical, latency‑sensitive workloads while shifting less risky inference to managed offerings that promise capacity guarantees.

NVIDIA’s Groq licensing is an instructive signal about how platform power migrates from metal to memory: from die geometry to orchestration, from chips to capacity. It is also a reminder that the next round of value in AI will accrue less to raw foundry share and more to those who can guarantee predictable, low‑latency inference at scale.

In short: this is an acqui‑hire in the classroom of platforms. It won’t show up as headcount on a balance sheet, but it will show up in enterprise budgets, in chip cycle timing, and in the next boardroom debate over whether you buy silicon or buy the latency guarantee. The smart money will start pricing inference as a delivered capacity with a service premium — and the rest will discover that the path to performance now runs through a vendor’s integration team as much as through its GPU die.

Conclusion: Treat the Groq licensing not as a footnote in NVIDIA’s IP ledger, but as strategic compression — condensing people, paths and performance into a platform that sells predictability. That compression rewires procurement logic and, by extension, the economics of AI inference.

Tags

Related Articles

Sources

NVIDIA and Groq company announcements and press releases; licensing agreement disclosures; enterprise AI procurement analysis; chip industry reporting from The Information, TechCrunch, and semiconductor trade publications.