The Market Inflection Point

Dubbing technology has transformed from a stigmatized afterthought into a strategic necessity. Netflix now dubs content into more than 36 languages, and consumption of dubbed content on the platform has grown by more than 120 percent annually in recent years. This shift represents not merely expanded distribution but a fundamental restructuring of how global audiences access entertainment, and of how studios monetize it.

The economic model is compelling. After Money Heist finished its original run on Spanish television, Netflix acquired the series and relaunched it with English dubbing; it became the platform’s most-watched non-English series in 2018. Squid Game, dubbed into more than 30 languages, became Netflix’s biggest show ever, demonstrating that comparatively low-cost foreign content can generate massive returns when properly localized.

The Infrastructure Behind the Boom

Netflix’s approach reveals how technology enables scale. The company invested one billion dollars in content localization in a single year, building distributed infrastructure that connects dubbing studios across continents. Netflix now works with more than 125 facilities worldwide, coordinating translation, voice recording, and audio synchronization through secure cloud platforms.

This infrastructure solves the core problem: converting foreign-language hits into native-feeling experiences across dozens of markets simultaneously. Nearly 50 percent of non-English-speaking viewers prefer dubbed versions over subtitled ones, particularly in genres where visual attention matters, such as action, drama, and animation. The preference isn’t marginal; it shows up in viewing completion rates and subscription growth.

Foreign Studios: The Hidden Beneficiaries

The dubbing boom creates asymmetric opportunities for non-English production studios. Korean, Spanish, and Brazilian content producers now reach global audiences previously blocked by language barriers. Over 40 percent of viewing of branded Korean unscripted series on Netflix uses dubbed audio, and viewers in Brazil, Mexico, the rest of Latin America, and Europe show strong preferences for dubbing over subtitles.

The financial mathematics favors these producers dramatically. Production costs for foreign content often run far below those of comparable American productions. When dubbing removes language friction, these comparatively low-cost productions generate some of streaming platforms’ biggest hits. The result: foreign studios gain international revenue streams while maintaining cost structures that Hollywood cannot match.

Netflix’s dubbing strategy created entirely new employment categories, including a Director of International Dubbing position and multiple Creative Manager roles across languages. The ecosystem expands beyond platforms to include voice talent networks, localization studios, and technical infrastructure providers—each capturing value from the cross-border content flow.

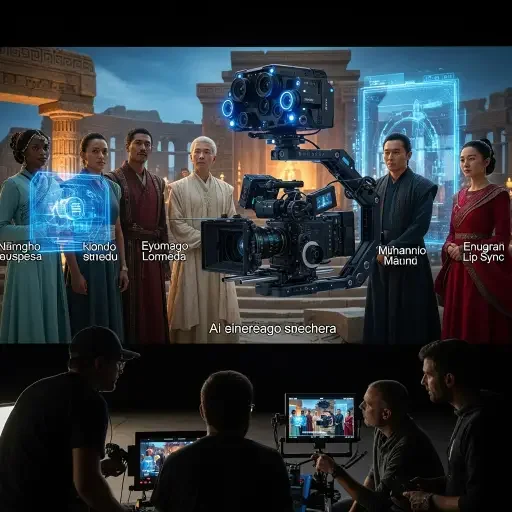

The AI Transformation: From Manual to Algorithmic

Traditional dubbing faces inherent constraints. Voice actors must match timing precisely while preserving emotional tone. Lip movements rarely align across languages—word counts differ, grammatical structures diverge. The process demands skilled translators, experienced voice talent, and frame-by-frame audio editing. Cost per minute ranges from hundreds to thousands of dollars; turnaround time spans weeks or months.
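Because translated lines rarely run the same length as the originals, one basic check is whether a dubbed take fits the original time slot with only modest speed adjustment. A minimal sketch, assuming per-line durations have already been measured; the `DubLine` type and the ±10 percent stretch tolerance are hypothetical choices for illustration, not an industry standard:

```python
from dataclasses import dataclass

# Hypothetical tolerance: how much a dubbed take may be sped up or slowed down
# before it starts to sound rushed or dragged. Real productions set their own limits.
MAX_STRETCH = 0.10


@dataclass
class DubLine:
    """One dialogue line: original on-screen duration vs. the recorded dubbed take (seconds)."""
    source_text: str
    source_seconds: float
    dubbed_seconds: float


def stretch_factor(line: DubLine) -> float:
    """Speed-up ratio needed to fit the dubbed take into the original slot (>1 means faster)."""
    return line.dubbed_seconds / line.source_seconds


def needs_rewrite(line: DubLine, tolerance: float = MAX_STRETCH) -> bool:
    """True when time-stretching alone cannot fit the take, so the translation must change."""
    return abs(stretch_factor(line) - 1.0) > tolerance


lines = [
    DubLine("Where were you last night?", source_seconds=1.8, dubbed_seconds=1.9),
    DubLine("I told you, I was at work.", source_seconds=1.5, dubbed_seconds=2.1),
]
for line in lines:
    print(f"{line.source_text!r}: stretch x{stretch_factor(line):.2f}, "
          f"rewrite needed: {needs_rewrite(line)}")
```

Here the first take fits with a speed-up of about 6 percent, while the second runs 40 percent long and would go back to the translator for a tighter phrasing.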

AI lip-sync technology addresses these constraints through generative models that synchronize mouth movements with translated audio. The technical approach: encode video and audio into latent representations, translate between languages while preserving timing information, then generate visually aligned output in which lip movements match the new dialogue.

Amazon’s December 2024 research introduced an audio-visual speech-to-speech translation framework that improves lip synchronization without altering the original visual content. It uses a duration loss to keep the translated speech properly timed and a synchronization loss to match the audio to the speaker’s lip movements. This addresses a key problem: earlier systems modified the video to fit the audio, creating disjointed experiences and ethical concerns about content integrity.
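The source does not spell out the paper’s loss formulas, so the sketch below uses two widely used stand-ins as assumptions: a FastSpeech-style duration loss (mean squared error on log durations) and a SyncNet-style synchronization loss (binary cross-entropy on the cosine similarity between audio and lip embeddings). The embeddings, the `sync_weight` value, and all function names are hypothetical; this illustrates the idea, not Amazon’s implementation.

```python
import math


def duration_loss(pred_frames, target_frames):
    """Mean squared error between predicted and reference durations, in the log domain.

    Durations are per-unit frame counts; log(1 + d) keeps long units from dominating.
    """
    if len(pred_frames) != len(target_frames):
        raise ValueError("duration sequences must have the same length")
    errors = [(math.log1p(p) - math.log1p(t)) ** 2
              for p, t in zip(pred_frames, target_frames)]
    return sum(errors) / len(errors)


def cosine_similarity(a, b):
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm


def sync_loss(audio_embedding, lip_embedding, eps=1e-7):
    """Treat cosine similarity as P(in sync) and apply binary cross-entropy with label 1."""
    p = min(max(cosine_similarity(audio_embedding, lip_embedding), eps), 1.0)
    return -math.log(p)


def total_loss(pred_frames, target_frames, audio_emb, lip_emb, sync_weight=0.5):
    """Hypothetical weighting of the two terms; the real balance is a tuning choice."""
    return (duration_loss(pred_frames, target_frames)
            + sync_weight * sync_loss(audio_emb, lip_emb))


aligned = sync_loss([0.2, 0.9, 0.4], [0.25, 0.85, 0.4])  # lips follow the audio
shifted = sync_loss([0.2, 0.9, 0.4], [0.9, 0.1, 0.3])    # lips follow something else
print(f"in sync: {aligned:.3f}, out of sync: {shifted:.3f}")
```

Both terms are zero when timing and lip embeddings agree perfectly and grow as they drift apart, which is what lets a training loop push the translated speech toward the original mouth movements.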

Current Capabilities and Platforms

The AI dubbing market has matured rapidly. Multiple commercial platforms and open-source models now offer automated lip synchronization:

Sync Labs provides API access for real-time lip-syncing across movies, podcasts, games, and animations. Their model processes standard video files without requiring complex 3D avatars, delivering accurate reproductions while preserving video quality.

Rask AI supports translation into 135 languages with voice cloning and automatic lip synchronization. The platform handles videos up to five hours long, processing both dubbed audio and visual alignment automatically.

MuseTalk represents the open-source frontier: a real-time model that reaches 30+ frames per second on a single GPU. The system combines perceptual loss, GAN loss, and sync loss during training, balancing visual quality against lip-sync accuracy through spatio-temporal data sampling.
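How the three training signals are balanced can be sketched as a weighted sum. The sketch assumes a simple feature distance as a stand-in for perceptual loss, a non-saturating generator loss for the GAN term, and a sync-expert probability for the sync term; the weights are placeholders rather than MuseTalk’s published values.

```python
import math


def feature_distance(generated, reference):
    """Mean absolute difference between feature vectors: a stand-in for perceptual loss,
    which in practice compares activations of a pre-trained image network."""
    return sum(abs(g - r) for g, r in zip(generated, reference)) / len(generated)


def adversarial_loss(disc_prob_real, eps=1e-7):
    """Non-saturating generator loss: low when the discriminator believes the frame is real."""
    return -math.log(max(disc_prob_real, eps))


def sync_penalty(sync_prob, eps=1e-7):
    """Low when a lip-sync expert model judges audio and mouth to be in sync."""
    return -math.log(max(sync_prob, eps))


def combined_loss(generated, reference, disc_prob_real, sync_prob,
                  w_perceptual=1.0, w_gan=0.01, w_sync=0.05):
    """Weighted sum of the three terms. The weights are placeholders, not MuseTalk's values."""
    return (w_perceptual * feature_distance(generated, reference)
            + w_gan * adversarial_loss(disc_prob_real)
            + w_sync * sync_penalty(sync_prob))


frame = dict(generated=[0.5, 0.4, 0.9], reference=[0.5, 0.5, 0.8],
             disc_prob_real=0.6, sync_prob=0.7)
print(combined_loss(**frame))               # default balance
print(combined_loss(**frame, w_sync=0.5))   # weight lip-sync accuracy more heavily
```

Raising `w_sync` makes lip-sync errors more expensive during training, pushing the generator toward tighter synchronization, potentially at some cost to visual fidelity; this is the trade-off the description above refers to.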

Many of these tools share a common architecture: freeze pre-trained audio and video encoders, train translation layers in latent space, then use diffusion- or GAN-based generators to produce synchronized output. The result: automated dubbing that costs a fraction of manual approaches while delivering comparable quality.
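The freeze-then-adapt pattern can be shown with a toy example. Assumptions: two fixed 2×2 linear maps stand in for the pre-trained encoders, a trainable 2×2 matrix stands in for the translation layer, and plain stochastic gradient descent replaces a deep-learning framework. Only the adapter’s weights change; the encoders run forward and are never updated.

```python
import random


def matvec(m, v):
    """Multiply a small matrix (sequence of rows) by a vector."""
    return [sum(w * x for w, x in zip(row, v)) for row in m]


# Toy stand-ins for pre-trained encoders. Tuples make the "frozen" status explicit:
# training below only runs them forward and never modifies them.
SOURCE_ENCODER = ((0.8, 0.1), (0.2, 0.9))
TARGET_ENCODER = ((1.0, 0.3), (-0.2, 0.7))


def train_adapter(pairs, epochs=500, lr=0.05):
    """Fit a trainable 2x2 adapter mapping source latents onto target latents with SGD."""
    adapter = [[0.0, 0.0], [0.0, 0.0]]
    for _ in range(epochs):
        for x, y in pairs:
            z_src = matvec(SOURCE_ENCODER, x)   # frozen forward pass
            z_tgt = matvec(TARGET_ENCODER, y)   # frozen forward pass
            pred = matvec(adapter, z_src)
            # Gradient of 0.5 * ||pred - z_tgt||^2 w.r.t. adapter[i][j] is err_i * z_src[j].
            for i in range(2):
                err = pred[i] - z_tgt[i]
                for j in range(2):
                    adapter[i][j] -= lr * err * z_src[j]
    return adapter


def latent_mse(adapter, pairs):
    """Average squared distance between adapted source latents and target latents."""
    total = 0.0
    for x, y in pairs:
        pred = matvec(adapter, matvec(SOURCE_ENCODER, x))
        target = matvec(TARGET_ENCODER, y)
        total += sum((p - t) ** 2 for p, t in zip(pred, target))
    return total / len(pairs)


random.seed(0)
# Paired examples: the same underlying content seen through both encoders.
samples = [[random.uniform(-1, 1), random.uniform(-1, 1)] for _ in range(20)]
pairs = [(s, s) for s in samples]

untrained = [[0.0, 0.0], [0.0, 0.0]]
adapter = train_adapter(pairs)
print(f"latent error before: {latent_mse(untrained, pairs):.4f}, "
      f"after: {latent_mse(adapter, pairs):.2e}")
```

In a real system the same principle means only a small number of parameters need training, while the large pre-trained encoders are reused as-is.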

The Technical Challenge: Perfect Synchronization

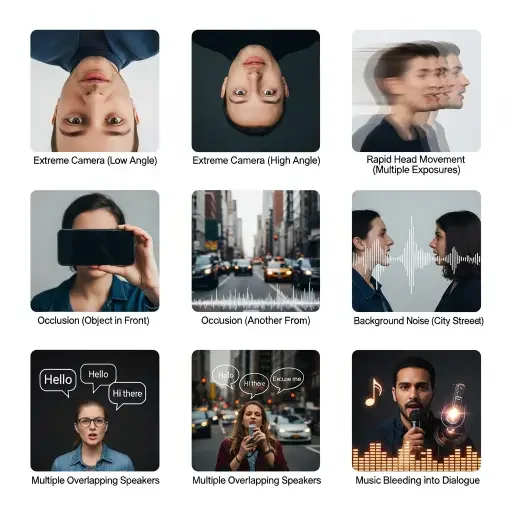

Lip-sync quality depends on multiple factors beyond simple audio-visual alignment. Facial expressions must convey emotional tone accurately. Mouth shapes should match phonemes in the target language. Timing must feel natural—neither rushed nor stretched. Background audio and ambient sound require separate handling to avoid artifacts.
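Matching mouth shapes is usually reasoned about in terms of visemes: groups of phonemes that look alike on the lips (p, b, and m all close the lips, for example). A minimal sketch, assuming a tiny hypothetical viseme table with single letters as phoneme symbols and sequences that are already time-aligned:

```python
# Simplified, hypothetical viseme table using single letters as phoneme symbols.
# Production systems use larger, language-specific phoneme inventories.
VISEMES = {
    "p": "lips-closed", "b": "lips-closed", "m": "lips-closed",
    "f": "lip-to-teeth", "v": "lip-to-teeth",
    "o": "rounded", "u": "rounded", "w": "rounded",
    "a": "open", "e": "spread", "i": "spread",
}


def to_visemes(phonemes):
    """Map each phoneme to its mouth-shape class; unlisted phonemes count as 'neutral'."""
    return [VISEMES.get(p, "neutral") for p in phonemes]


def viseme_mismatch(source_phonemes, target_phonemes):
    """Share of positions where the mouth shape differs, assuming the two sequences
    are already time-aligned one phoneme per slot (a simplification)."""
    src, tgt = to_visemes(source_phonemes), to_visemes(target_phonemes)
    n = min(len(src), len(tgt))
    if n == 0:
        return 0.0
    return sum(s != t for s, t in zip(src[:n], tgt[:n])) / n


print(viseme_mismatch(list("mama"), list("papa")))  # 0.0: same shapes, different sounds
print(viseme_mismatch(list("mama"), list("nono")))  # 1.0: every shape differs
```

The first comparison shows why different words can still look right on screen; the second shows a line whose every mouth shape would need regenerating.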

Netflix’s dubbing team conducts workshops on cultural and linguistic nuances, ensuring that local expressions are represented accurately and that terms such as Korean honorifics are localized appropriately without losing their meaning. AI systems must encode these cultural dimensions alongside technical synchronization.

The frontier challenge: real-time generation with full emotional synthesis. Current systems handle mouth movements; next-generation models will modulate eyebrows, eye direction, and micro-expressions to match audio emotional content. This approaches “digital human” capability—video that adapts dynamically to any audio input while maintaining perceptual authenticity.

Market Trajectory and Economic Impact

The global film dubbing market reached 4.04 billion dollars in 2024 and is projected to reach 7.63 billion dollars by 2033, a compound annual growth rate of 7.31 percent. This projection understates AI’s impact, because traditional dubbing costs constrain market size. As AI reduces per-minute costs by 90 percent or more and cuts turnaround from weeks to hours, the addressable market expands dramatically.
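The quoted growth rate can be checked against the two endpoints with the standard compound annual growth formula, treating 2024 to 2033 as nine compounding years:

```python
def cagr(start_value, end_value, years):
    """Compound annual growth rate: the constant yearly rate linking start to end."""
    return (end_value / start_value) ** (1 / years) - 1


# Market estimate quoted above, in billions of US dollars; 2024 to 2033 spans 9 years.
rate = cagr(4.04, 7.63, 2033 - 2024)
print(f"implied growth rate: {rate:.2%} per year")
```

This comes out at roughly 7.3 percent a year, consistent with the quoted 7.31 percent once rounding of the endpoint figures is taken into account.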

The economic logic: dubbing converts single-market content into a global product. A Korean drama that cost three million dollars to produce, once dubbed into 30 languages via AI, can generate revenue across dozens of territories. The marginal cost of each additional language falls toward zero, while potential revenue grows with every territory added.
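To make the marginal-cost argument concrete, here is a back-of-the-envelope sketch. Only the three-million-dollar budget and the 30 languages come from the example above; the 400-minute runtime, the $1,000 manual cost per finished minute (inside the hundreds-to-thousands range cited earlier), and the 90 percent AI saving are assumptions for illustration.

```python
PRODUCTION_COST = 3_000_000   # from the example above (USD)
LANGUAGES = 30                # from the example above
RUNTIME_MINUTES = 400         # hypothetical: e.g. 8 episodes x 50 minutes
MANUAL_PER_MINUTE = 1_000     # hypothetical manual dubbing cost (USD per finished minute)
AI_COST_REDUCTION = 0.90      # hypothetical 90 percent saving for AI-assisted dubbing


def localization_cost(per_minute, languages=LANGUAGES, minutes=RUNTIME_MINUTES):
    """Total dubbing spend: each target language pays for every minute of runtime."""
    return per_minute * minutes * languages


manual = localization_cost(MANUAL_PER_MINUTE)
ai = localization_cost(MANUAL_PER_MINUTE * (1 - AI_COST_REDUCTION))

for label, cost in [("manual", manual), ("AI-assisted", ai)]:
    print(f"{label}: ${cost:,.0f} total, ${cost / LANGUAGES:,.0f} per language, "
          f"{cost / PRODUCTION_COST:.0%} of the production budget")
```

Under these assumptions, manual dubbing into 30 languages would cost four times the production budget, while the AI-assisted path comes to about 40 percent of it, roughly $40,000 per language: small relative to the show, though not literally zero.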

For streaming platforms, this enables aggressive international expansion. For content creators, it democratizes global distribution—small studios can now compete internationally without Hollywood-scale localization budgets. For viewers, it removes the subtitles-or-nothing choice, expanding access to world cinema.

Implementation Barriers and Solutions

Technical challenges remain. AI models struggle with extreme camera angles, rapid head movements, and occlusions where objects pass in front of faces. Audio quality affects output—background noise, multiple overlapping speakers, and music bleeding into dialogue channels all degrade synchronization accuracy.

Cultural adaptation presents non-technical complexity. Humor doesn’t translate mechanically—jokes require cultural context, wordplay needs localization, and timing must adjust for different comedic sensibilities. AI can synchronize lips but cannot yet autonomously adapt cultural references for maximum impact across markets.

The solution architecture combines AI automation with human oversight. Automated systems handle 90 percent of the work—translation, voice generation, and lip synchronization. Human experts review output, adjust cultural elements, and refine edge cases where AI fails. This hybrid approach captures efficiency gains while maintaining quality control.
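The hybrid workflow can be sketched as a triage step that auto-approves routine shots and queues difficult ones for human review, using the failure modes listed above. The `Shot` fields and both thresholds are hypothetical; a real pipeline would derive these signals from face tracking, sync scoring, and translator notes.

```python
from dataclasses import dataclass


@dataclass
class Shot:
    """Quality signals for one dubbed shot. Field names and scales are hypothetical."""
    shot_id: str
    sync_confidence: float   # 0..1, e.g. from an automatic lip-sync scorer
    head_yaw_degrees: float  # how far the face is turned away from the camera
    mouth_occluded: bool     # an object passes in front of the speaker's mouth
    wordplay: bool           # translator flagged a joke, pun, or cultural reference


def needs_human_review(shot, min_sync=0.8, max_yaw=45.0):
    """Send the cases AI handles poorly (per the text above) to a human reviewer."""
    return (shot.sync_confidence < min_sync
            or abs(shot.head_yaw_degrees) > max_yaw
            or shot.mouth_occluded
            or shot.wordplay)


def triage(shots):
    """Split shots into an auto-approved list and a human-review queue."""
    auto, review = [], []
    for shot in shots:
        (review if needs_human_review(shot) else auto).append(shot.shot_id)
    return auto, review


shots = [
    Shot("s01", 0.93, 10.0, False, False),  # frontal, high sync confidence: ships automatically
    Shot("s02", 0.91, 70.0, False, False),  # extreme head turn
    Shot("s03", 0.95, 5.0, False, True),    # joke that needs cultural adaptation
]
print(triage(shots))
```

The reviewer queue stays small when most shots are routine, which is where the efficiency of the hybrid approach comes from.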

The Five-Year Horizon

Three trajectories will define dubbing technology’s near future:

Full emotional synthesis: AI will modulate entire facial expressions, not just mouth movements, to match audio emotional content. Eyes, eyebrows, and subtle muscle movements will align with voice tone, creating perceptually complete performances in any language.

Real-time interactive dubbing: Live video streams will support instant language conversion with synchronized lip movements. Video calls, live broadcasts, and streaming content will translate on-the-fly, eliminating language barriers in real-time communication.

Personalized voice matching: Users will select preferred voice characteristics for dubbed content—age, accent, vocal tone—while maintaining synchronization quality. This extends beyond translation to audio customization, letting viewers optimize their experience across dimensions beyond language alone.
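Real-time interactive dubbing ultimately comes down to a latency budget: speech recognition, translation, speech synthesis, and lip-sync rendering must together fit within the delay a live audience tolerates, and the renderer must also keep pace with the frame rate (the 30 frames per second cited for MuseTalk leaves about 33 ms per frame). All stage timings and the 2-second target below are hypothetical; the point is the accounting, not the numbers.

```python
FPS = 30
FRAME_BUDGET_MS = 1000 / FPS   # about 33.3 ms to render each frame at 30 fps

# Hypothetical per-utterance latencies (milliseconds); real figures vary widely by system.
STAGE_LATENCY_MS = {
    "speech_recognition": 300,
    "machine_translation": 150,
    "speech_synthesis": 250,
    "lip_sync_first_frame": 100,
}
TARGET_DELAY_MS = 2000         # hypothetical acceptable delay for a live broadcast


def pipeline_delay_ms(stages):
    """Simplifying assumption: stages run one after another per utterance, so latencies add.
    Streaming systems overlap stages and can do better than this sum."""
    return sum(stages.values())


def keeps_up(per_frame_ms, fps=FPS):
    """A lip-sync renderer sustains real time only if each frame fits within 1/fps seconds."""
    return per_frame_ms <= 1000 / fps


delay = pipeline_delay_ms(STAGE_LATENCY_MS)
print(f"end-to-end delay: {delay} ms (target {TARGET_DELAY_MS} ms), "
      f"within budget: {delay <= TARGET_DELAY_MS}")
print(f"renderer at 25 ms/frame keeps up: {keeps_up(25)}; at 40 ms/frame: {keeps_up(40)}")
```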

These capabilities exist in research labs today. Commercial deployment within five years seems probable; the economic incentives align, the technical foundations are established, and the market infrastructure is scaling rapidly.

Strategic Implications

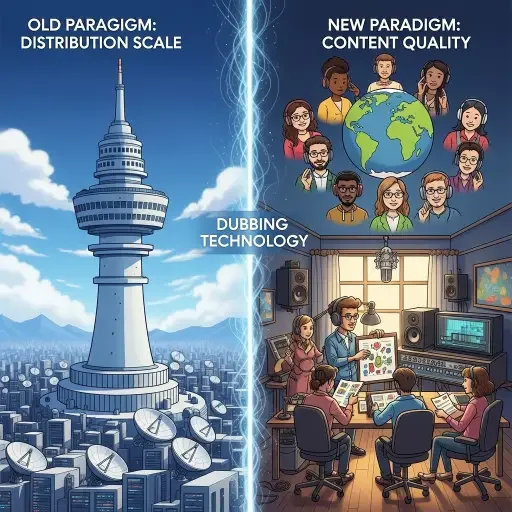

For content producers: dubbing technology shifts competitive advantage from distribution scale to content quality. Small studios can now reach global audiences; the differentiator becomes storytelling capability rather than localization budget.

For platforms: AI dubbing enables aggressive international expansion without proportional cost increases. If AI systems cut per-minute localization costs by 90 percent, a budget the size of Netflix’s billion-dollar localization investment could cover roughly ten times as much content, radically expanding the libraries available to non-English subscribers.

For foreign-language studios: this represents the largest market access expansion in entertainment history. Korean, Turkish, Nigerian, and Brazilian producers can monetize globally without Hollywood intermediation. The result: more diverse content flows, more profitable international production, and accelerated growth in non-English entertainment ecosystems.

Conclusion: The Disappearing Barrier

Language has always constrained entertainment distribution. Subtitles solve the problem inadequately—they demand split attention, reduce accessibility, and create cognitive load. Traditional dubbing solved it expensively, limiting which content justified localization investment.

AI lip-sync technology is dissolving that constraint. Content can flow across language boundaries with far less friction, reaching global audiences without perceptual compromise. The economic model shifts from “can we afford to dub this?” to “which 30 languages should we launch in simultaneously?”

This changes entertainment economics fundamentally. When distribution costs approach zero and dubbing costs follow, content quality becomes the sole differentiator. The best stories, regardless of origin language, can now find their global audience. The uncanny valley of mismatched lips and voices closes—and with it, the last major barrier to truly global cinema.

Sources

Research based on Netflix localization data, Amazon AI research (December 2024), dubbing platform and model specifications (Sync Labs, Rask AI, MuseTalk), market analysis from film dubbing industry reports (2024-2033), and streaming platform viewership statistics for foreign content.