The Cold Arithmetic of Orbit

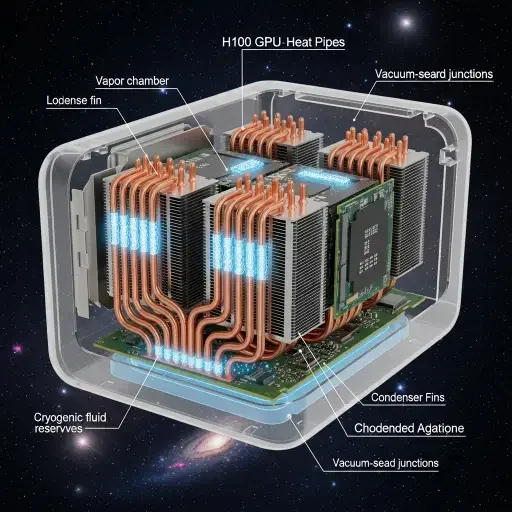

Here's what makes this improbable: an H100 GPU dissipates up to roughly 700 watts of heat. On Earth, that's a solvable problem: fans, liquid cooling, air circulation. In space, there is no air, so convection doesn't exist. Heat can leave only by radiation, and a radiator near room temperature sheds only a few hundred watts per square meter of surface. Aethero's satellite had to radiate all that thermal energy into the void while keeping its electronics within an operating range of -40°C to 85°C. They solved it with heat pipes, radiators, and algorithmic throttling, a ballet of thermal engineering that makes the GPU's computational output almost secondary.
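The Stefan-Boltzmann law, P = εσAT⁴, shows why radiators dominate the thermal design: it gives the area an ideal radiator needs to reject a given heat load. The sketch below makes several simplifying assumptions. The radiator is one-sided, has an emissivity of 0.9, runs at 300 K, and absorbs no sunlight or Earth infrared. The result is therefore a lower bound, not a design figure. The emissivity and temperature are illustrative values, not Aethero's numbers.

```python
# Stefan-Boltzmann constant, W m^-2 K^-4
SIGMA = 5.670374419e-8


def radiator_area_m2(heat_w: float, temp_k: float, emissivity: float = 0.9) -> float:
    """Minimum one-sided radiator area needed to reject heat_w to deep space.

    Assumes the radiator sees only cold space and absorbs no sunlight or
    Earth infrared, so a real radiator would need to be larger.
    """
    flux_w_per_m2 = emissivity * SIGMA * temp_k**4  # emitted power per square meter
    return heat_w / flux_w_per_m2


if __name__ == "__main__":
    # 700 W is the H100 figure from the text; 300 K and 0.9 are assumptions.
    area = radiator_area_m2(700.0, temp_k=300.0, emissivity=0.9)
    print(f"{area:.2f} m^2")  # 1.69 m^2
```

Under these assumptions the GPU alone needs about 1.7 square meters of radiator. Running the radiator hotter shrinks it quickly because of the T⁴ term, which is one reason the temperature ceiling matters so much.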

Almost. Because the real story isn't that they kept the chip cool. It's what running inference in orbit unlocks.

Why Intelligence Belongs in Space

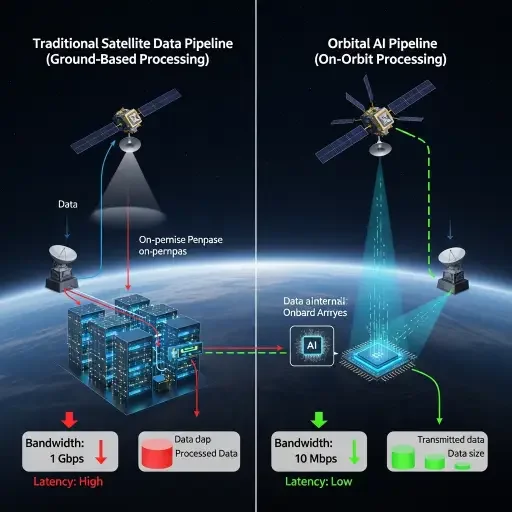

Consider the latency problem. A satellite imaging Earth from 500 kilometers altitude can generate terabytes of data daily from synthetic aperture radar sweeps, hyperspectral imaging, or real-time video. Currently, most of that data gets downlinked to ground stations for processing, a bottleneck constrained by radio bandwidth and orbital mechanics. A satellite might be in view of a ground station for about 10 minutes per pass. During that window, it can transmit perhaps 100 gigabytes. The rest queues.
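The pass arithmetic in this paragraph is easy to check. Moving 100 gigabytes in a 10-minute pass implies a sustained link of roughly 1.3 gigabits per second. The sketch below turns that figure into a daily backlog estimate. The 2-terabyte daily collection and the four usable passes per day are hypothetical inputs chosen to match the orders of magnitude above. They are not figures for any real mission.

```python
def required_rate_gbps(volume_gb: float, pass_minutes: float) -> float:
    """Link rate in gigabits per second needed to move volume_gb gigabytes in one pass."""
    return volume_gb * 8 / (pass_minutes * 60)


def daily_backlog_gb(collected_gb: float, passes_per_day: int, gb_per_pass: float) -> float:
    """Data still aboard at the end of a day; zero if the passes keep up."""
    return max(0.0, collected_gb - passes_per_day * gb_per_pass)


if __name__ == "__main__":
    # 100 GB in a 10-minute pass, the figures quoted in the text
    print(f"{required_rate_gbps(100, 10):.2f} Gbps")  # 1.33 Gbps
    # Hypothetical day: 2,000 GB collected, 4 usable passes of 100 GB each
    print(f"{daily_backlog_gb(2000, 4, 100):.0f} GB left aboard")  # 1600 GB left aboard
```

Under those assumptions, 1.6 terabytes (80 percent of the day's collection) stays aboard. That is the queue onboard inference is meant to shrink.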

Now place an H100 on the satellite. Inference happens in orbit: object detection, change analysis, anomaly identification. Instead of downlinking raw pixels, the satellite transmits insights such as coordinates, classifications, and alerts. The effective data reduction shifts from 1:1 to potentially 1000:1. Latency collapses from hours to seconds, provided a communications link is available when the alert is ready.
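A ratio near 1000:1 becomes plausible once you compare a raw image tile with a packed list of detections. The sketch below assumes a hypothetical 16-byte record per detection, holding latitude, longitude, class ID, quantized confidence, and a time offset. It also assumes a hypothetical 10,000 by 10,000-pixel tile at 3 bytes per pixel. Neither is a real downlink format.

```python
import struct
from dataclasses import dataclass

# Hypothetical 16-byte record (little-endian, no padding):
# lat float32, lon float32, class id uint16,
# confidence scaled to 0-65535 uint16, seconds since pass start uint32
RECORD = struct.Struct("<ffHHI")


@dataclass
class Detection:
    lat: float
    lon: float
    class_id: int
    confidence: float  # 0.0 to 1.0
    t_offset_s: int


def pack_detections(dets: list[Detection]) -> bytes:
    """Serialize detections into the compact downlink payload."""
    return b"".join(
        RECORD.pack(d.lat, d.lon, d.class_id, round(d.confidence * 65535), d.t_offset_s)
        for d in dets
    )


def compression_ratio(raw_bytes: int, payload: bytes) -> float:
    """How many raw bytes each transmitted byte stands in for."""
    return raw_bytes / len(payload)


if __name__ == "__main__":
    raw = 10_000 * 10_000 * 3  # hypothetical RGB tile, about 300 MB
    dets = [Detection(12.5, -45.25, 3, 0.91, 42) for _ in range(10_000)]
    payload = pack_detections(dets)
    print(len(payload), f"{compression_ratio(raw, payload):.0f}:1")
```

With 10,000 detections the payload is 160 kilobytes against 300 megabytes of pixels, a ratio of 1875:1. A real pipeline would likely also send small image chips around high-priority detections. The achievable ratio therefore depends on how much context analysts need.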

This isn't theoretical optimization. It's structural transformation. When processing moves to the point of collection, the entire information architecture inverts. The satellite stops being a passive sensor and becomes an autonomous analytical node—a piece of distributed intelligence infrastructure floating at Mach 23.

The Economics of Orbital Compute

Space-grade components cost approximately 10 to 100 times their terrestrial equivalents. Radiation hardening, thermal qualification, launch certification—each adds zeros. An H100 retails around $30,000. By the time it's space-qualified and integrated, the unit cost approaches $500,000 to $1 million. Add launch costs—currently $3,000 to $5,000 per kilogram to low Earth orbit—and the H100's 3-kilogram mass translates to another $9,000 to $15,000.
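The cost stack in this paragraph can be reproduced in a few lines. The sketch below uses only the ranges quoted above: $500,000 to $1 million for a qualified unit, $3,000 to $5,000 per kilogram to orbit, and 3 kilograms of mass. It deliberately ignores the rest of the satellite bus, insurance, and operations.

```python
def orbital_unit_cost(qualified_unit_usd: float, mass_kg: float, launch_usd_per_kg: float) -> float:
    """Space-qualified hardware cost plus the launch cost of its own mass."""
    return qualified_unit_usd + mass_kg * launch_usd_per_kg


if __name__ == "__main__":
    low = orbital_unit_cost(500_000, 3, 3_000)
    high = orbital_unit_cost(1_000_000, 3, 5_000)
    print(f"${low:,.0f} to ${high:,.0f}")  # $509,000 to $1,015,000
```

Launch adds under 2 percent in both cases. At these numbers, qualification dominates the bill, not mass.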

Yet orbital real estate offers unique advantages. A satellite constellation in sun-synchronous orbit passes over any given latitude at the same local solar time, keeping lighting conditions consistent, which is critical for comparing images over time. Revisit times compress; a properly configured constellation can image any point on Earth every 10 minutes. Dependence on ground infrastructure shrinks; the compute orbits where the data originates.
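Sun-synchronous orbits hold their lighting geometry because Earth's equatorial bulge (the J2 term) precesses the orbital plane. At the right inclination, the plane turns about 360 degrees per year, matching Earth's motion around the Sun. For a circular orbit, the standard first-order J2 result gives the required inclination as cos i = -2Ω̇a^{7/2} / (3 J2 R² √μ), where Ω̇ is the required precession rate. The sketch below evaluates that formula. The 500-kilometer altitude echoes the example earlier in the piece, and the constants are standard published values for Earth.

```python
import math

MU_KM3_S2 = 398_600.4418  # Earth's gravitational parameter
R_EARTH_KM = 6_378.137    # equatorial radius
J2 = 1.08263e-3           # Earth's oblateness coefficient
# Required nodal precession: one full turn per tropical year, in rad/s
OMEGA_SSO = 2 * math.pi / (365.2422 * 86_400)


def sso_inclination_deg(altitude_km: float) -> float:
    """Inclination of a circular sun-synchronous orbit (first-order J2 model)."""
    a = R_EARTH_KM + altitude_km
    cos_i = -2 * OMEGA_SSO * a**3.5 / (3 * J2 * R_EARTH_KM**2 * math.sqrt(MU_KM3_S2))
    return math.degrees(math.acos(cos_i))


if __name__ == "__main__":
    print(f"{sso_inclination_deg(500):.1f} deg")  # 97.4 deg
```

The result is slightly retrograde (above 90 degrees), which is why sun-synchronous satellites pass near both poles. Higher orbits need a slightly larger inclination.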

The calculus shifts when you model where intelligence needs to be. If edge AI's value is minimizing latency and bandwidth, orbit is the ultimate edge: co-located with the sensors that generate the data and unconstrained by terrestrial infrastructure. The infrastructure costs get amortized across the mission duration, typically 5 to 7 years for modern satellites.
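Amortization makes the per-year figure concrete. The sketch below applies straight-line amortization over the 5-to-7-year mission lives quoted here. It uses the $500,000 to $1 million qualified-unit cost given earlier in this section. Straight-line spreading, and ignoring financing and hardware degradation, are simplifying assumptions. The 50 percent duty cycle in the example is hypothetical.

```python
def annual_cost(unit_cost_usd: float, mission_years: float) -> float:
    """Straight-line amortization: cost spread evenly over the mission."""
    if mission_years <= 0:
        raise ValueError("mission_years must be positive")
    return unit_cost_usd / mission_years


def cost_per_compute_hour(unit_cost_usd: float, mission_years: float, duty_cycle: float) -> float:
    """Amortized cost per hour the accelerator is actually running."""
    if not 0 < duty_cycle <= 1:
        raise ValueError("duty_cycle must be in (0, 1]")
    hours = mission_years * 365.25 * 24 * duty_cycle
    return unit_cost_usd / hours


if __name__ == "__main__":
    best = annual_cost(500_000, 7)     # cheaper unit, longer mission
    worst = annual_cost(1_000_000, 5)  # pricier unit, shorter mission
    print(f"${best:,.0f} to ${worst:,.0f} per year")
    # Hypothetical 50% duty cycle
    print(f"${cost_per_compute_hour(1_000_000, 5, 0.5):,.2f} per compute hour")
```

That works out to roughly $71,000 to $200,000 per year per accelerator. The cost per useful compute hour rises in inverse proportion to the duty cycle.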

The Technical Gauntlet

Radiation remains the primary adversary. Cosmic rays flip bits in silicon. A single event upset can crash a process; accumulated damage degrades chips over time. NVIDIA's space-grade solutions include error-correcting code memory, redundant processing paths, and strategic shielding. But shielding adds mass, and mass is the enemy of launch economics.
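Error-correcting memory stores extra parity bits alongside the data, so a single flipped bit can be located and repaired. Server-grade ECC typically uses a wider SECDED code over 64-bit words. The sketch below uses the classic Hamming(7,4) code instead because it fits in a few lines. It illustrates the principle only, not how any particular GPU implements ECC.

```python
def hamming74_encode(nibble: int) -> list[int]:
    """Encode 4 data bits into a 7-bit Hamming codeword (positions 1..7)."""
    d = [(nibble >> i) & 1 for i in range(4)]  # d[0] is the least significant bit
    p1 = d[0] ^ d[1] ^ d[3]  # covers positions 1, 3, 5, 7
    p2 = d[0] ^ d[2] ^ d[3]  # covers positions 2, 3, 6, 7
    p4 = d[1] ^ d[2] ^ d[3]  # covers positions 4, 5, 6, 7
    # Layout by position: 1=p1, 2=p2, 3=d0, 4=p4, 5=d1, 6=d2, 7=d3
    return [p1, p2, d[0], p4, d[1], d[2], d[3]]


def hamming74_decode(code: list[int]) -> tuple[int, int]:
    """Return (data nibble, 1-based position corrected, or 0 if none)."""
    c = code[:]  # leave the caller's list untouched
    s1 = c[0] ^ c[2] ^ c[4] ^ c[6]  # re-check positions 1, 3, 5, 7
    s2 = c[1] ^ c[2] ^ c[5] ^ c[6]  # re-check positions 2, 3, 6, 7
    s4 = c[3] ^ c[4] ^ c[5] ^ c[6]  # re-check positions 4, 5, 6, 7
    syndrome = s1 | (s2 << 1) | (s4 << 2)  # position of a single flipped bit
    if syndrome:
        c[syndrome - 1] ^= 1  # flip it back
    nibble = c[2] | (c[4] << 1) | (c[5] << 2) | (c[6] << 3)
    return nibble, syndrome


if __name__ == "__main__":
    word = hamming74_encode(0b1011)
    word[4] ^= 1  # simulate a single-event upset at position 5
    print(hamming74_decode(word))  # (11, 5)
```

This code would miscorrect two flips in the same codeword. That is why memory ECC usually adds an overall parity bit (single-error correction, double-error detection) and periodically scrubs memory so upsets do not accumulate.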

Aethero's approach combines selective shielding with algorithmic resilience. Critical memory regions get tantalum shielding; less critical components rely on error detection and rollback. The satellite runs continuous self-diagnostics, comparing outputs across redundant compute paths. When radiation-induced errors occur—and they will—the system catches them before they cascade.
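The compare-and-roll-back pattern described here can be sketched generically. The version below assumes three redundant paths with majority voting (triple modular redundancy). When no majority exists, it discards the outputs and recomputes from the saved input. The path count, retry limit, and function names are illustrative, not Aethero's design.

```python
from collections import Counter
from collections.abc import Callable, Hashable, Sequence
from typing import TypeVar

T = TypeVar("T", bound=Hashable)


class NoMajorityError(RuntimeError):
    """Redundant paths disagreed and no value had a strict majority."""


def majority_vote(outputs: Sequence[T]) -> T:
    """Return the value produced by more than half of the paths."""
    value, count = Counter(outputs).most_common(1)[0]
    if 2 * count <= len(outputs):
        raise NoMajorityError(f"no majority among {len(outputs)} outputs")
    return value


def run_redundant(step: Callable[[int, int], T], checkpoint: int,
                  paths: int = 3, max_retries: int = 2) -> T:
    """Run step(checkpoint, path) on every path; vote, or roll back and retry."""
    for _ in range(max_retries + 1):
        outputs = [step(checkpoint, path) for path in range(paths)]
        try:
            return majority_vote(outputs)
        except NoMajorityError:
            continue  # roll back: drop these outputs, recompute from the checkpoint
    raise NoMajorityError("persistent disagreement after retries")


if __name__ == "__main__":
    def step(x: int, path: int) -> int:
        y = x * x
        return y ^ 0b100 if path == 1 else y  # simulate an upset on path 1

    print(run_redundant(step, 7))  # 49
```

Voting only catches errors that hit one path at a time. A common-mode fault, such as a corrupted shared input, produces matching wrong answers, so the checkpoint itself also needs protection.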

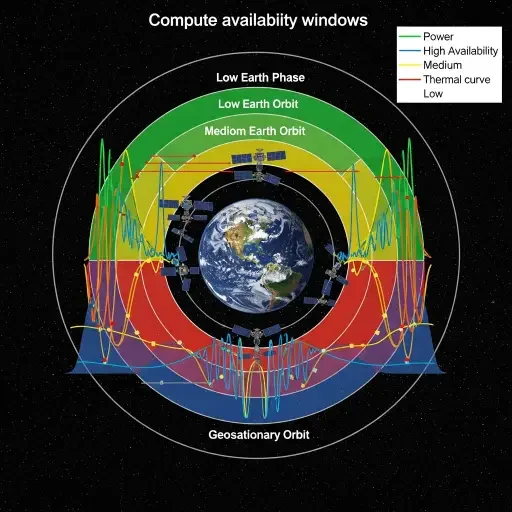

Power budget is the second constraint. Solar panels generate roughly 200 to 300 watts per square meter in orbit, so a 700-watt H100 would need about 2.3 to 3.5 square meters of array for the GPU alone. It must either throttle or operate intermittently. Aethero's satellite dynamically scales compute intensity based on solar input, battery state, and thermal headroom. During favorable orbital geometry, with strong solar input and ample thermal headroom, the H100 runs full throttle. During less favorable geometry, it throttles back or idles.
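A dynamic power policy like this one can be reduced to a small function of telemetry. The sketch below is a hypothetical rule set, not Aethero's controller. It idles the GPU when the battery is low and budgets power from solar surplus. It allows limited battery assist only when the battery is well charged, and it derates linearly as the GPU nears the 85°C ceiling mentioned earlier. Every threshold except the 700-watt maximum and the 85°C limit is an assumed value.

```python
from dataclasses import dataclass

GPU_MAX_W = 700.0         # H100 figure quoted in the text
TEMP_LIMIT_C = 85.0       # upper operating temperature quoted in the text
# Everything below is a hypothetical tuning value, not mission data.
GPU_IDLE_W = 100.0        # assumed idle draw; a cap below this has no effect
BUS_LOAD_W = 150.0        # non-GPU spacecraft load, served first
BATTERY_ASSIST_W = 200.0  # extra draw allowed from a well-charged battery
MIN_SOC = 0.4             # below this state of charge, idle the GPU
ASSIST_SOC = 0.8          # above this, allow battery assist
TEMP_MARGIN_C = 10.0      # start derating this far below the limit


@dataclass
class Telemetry:
    solar_w: float      # current solar array output
    battery_soc: float  # battery state of charge, 0.0 to 1.0
    gpu_temp_c: float   # hottest GPU temperature sensor


def gpu_power_cap(t: Telemetry) -> float:
    """Return a GPU power cap in watts from current telemetry."""
    if t.battery_soc < MIN_SOC:
        return GPU_IDLE_W  # protect the battery first
    budget = t.solar_w - BUS_LOAD_W
    if t.battery_soc > ASSIST_SOC:
        budget += BATTERY_ASSIST_W
    # Linear thermal derate: full power up to (limit - margin), idle at the limit
    headroom = (TEMP_LIMIT_C - t.gpu_temp_c) / TEMP_MARGIN_C
    thermal_cap = GPU_IDLE_W + max(0.0, min(1.0, headroom)) * (GPU_MAX_W - GPU_IDLE_W)
    return max(GPU_IDLE_W, min(budget, thermal_cap, GPU_MAX_W))


if __name__ == "__main__":
    print(gpu_power_cap(Telemetry(solar_w=1000, battery_soc=0.9, gpu_temp_c=50)))  # 700.0
    print(gpu_power_cap(Telemetry(solar_w=0, battery_soc=0.9, gpu_temp_c=40)))     # 100.0
```

On real hardware, a computed cap could be applied through NVIDIA's power-limit controls, such as `nvidia-smi --power-limit` or NVML's `nvmlDeviceSetPowerManagementLimit`, within the range the board permits.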

This creates an unusual property: orbital AI isn't uniformly available. It pulses with orbital mechanics, creating natural rhythms of compute availability. Applications must adapt to this temporal structure, a design constraint that doesn't exist in terrestrial data centers.
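Eclipse drives the largest of those rhythms. Consider a circular orbit with the Sun in the orbital plane (beta angle zero, the worst case). A cylindrical-shadow model then puts the fraction of each orbit spent in Earth's shadow at f = arcsin(R / (R + h)) / π. The sketch below evaluates that fraction together with the orbital period. The cylindrical shadow ignores the penumbra, and the 500-kilometer altitude is illustrative.

```python
import math

MU_KM3_S2 = 398_600.4418  # Earth's gravitational parameter
R_EARTH_KM = 6_378.137    # equatorial radius


def orbit_period_min(altitude_km: float) -> float:
    """Period of a circular orbit, in minutes."""
    a = R_EARTH_KM + altitude_km
    return 2 * math.pi * math.sqrt(a**3 / MU_KM3_S2) / 60


def eclipse_fraction(altitude_km: float) -> float:
    """Worst-case (beta = 0) fraction of a circular orbit spent in shadow,
    using a cylindrical Earth shadow that ignores the penumbra."""
    r = R_EARTH_KM + altitude_km
    return math.asin(R_EARTH_KM / r) / math.pi


if __name__ == "__main__":
    period = orbit_period_min(500)
    shadow = eclipse_fraction(500) * period
    print(f"period {period:.1f} min, shadow {shadow:.1f} min, sun {period - shadow:.1f} min")
```

At 500 kilometers this gives a roughly 95-minute orbit with about 36 minutes in shadow in the worst case. Unless batteries can carry the full GPU load through eclipse, the GPU spends more than a third of each orbit throttled. At high beta angles the shadow shrinks, and dawn-dusk sun-synchronous orbits can avoid eclipse for much of the year.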

What This Enables, Specifically

Maritime surveillance. A satellite constellation monitoring maritime traffic can identify suspicious vessel behavior (dark ships disabling transponders, unusual clustering, anomalous routes) by checking what its sensors see against AIS transponder reports onboard, without transmitting terabytes of raw imagery to ground stations. The decision loop compresses from hours to minutes.
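At its core, dark-vessel detection is a matching step: any ship detected in imagery with no nearby AIS report gets flagged. The sketch below does a brute-force nearest-report check using the haversine distance. The 2-kilometer match radius and the data layout are hypothetical. A real system would also match on timestamps and project AIS positions forward to the image time.

```python
import math
from dataclasses import dataclass

EARTH_RADIUS_KM = 6_371.0  # mean Earth radius


@dataclass(frozen=True)
class Position:
    lat: float  # degrees
    lon: float  # degrees


def haversine_km(a: Position, b: Position) -> float:
    """Great-circle distance between two points, in kilometers."""
    phi1, phi2 = math.radians(a.lat), math.radians(b.lat)
    dphi = phi2 - phi1
    dlmb = math.radians(b.lon - a.lon)
    h = math.sin(dphi / 2) ** 2 + math.cos(phi1) * math.cos(phi2) * math.sin(dlmb / 2) ** 2
    return 2 * EARTH_RADIUS_KM * math.asin(math.sqrt(h))


def dark_vessels(detections: list[Position], ais_reports: list[Position],
                 radius_km: float = 2.0) -> list[Position]:
    """Detections with no AIS report within radius_km (candidate dark ships)."""
    return [
        d for d in detections
        if all(haversine_km(d, r) > radius_km for r in ais_reports)
    ]


if __name__ == "__main__":
    seen = [Position(1.2650, 103.8200), Position(1.3000, 104.1000)]
    ais = [Position(1.2660, 103.8210)]  # only the first ship is broadcasting
    print(dark_vessels(seen, ais))
```

Only the flagged positions need to leave the satellite, which is what keeps the downlink small.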

Real-time disaster response. Satellites detecting wildfires or floods can run damage assessment models in orbit, immediately routing actionable intelligence to emergency services. The time between event detection and response initiation collapses.

Hyperspectral analysis. Hyperspectral satellites capture hundreds of spectral bands, but most lack the onboard processing for sophisticated analysis. With orbital AI, they can identify specific mineral signatures, compute vegetation health indices, or estimate atmospheric chemical composition in real time, transmitting only the analyzed results.
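Vegetation health indices show how little data needs to be downlinked. The normalized difference vegetation index (NDVI) combines a near-infrared band and a red band per pixel: NDVI = (NIR - Red) / (NIR + Red). The sketch below computes NDVI over two tiny bands and summarizes the scene. The toy reflectance values and the 0.5 "healthy" threshold are illustrative assumptions. A hyperspectral instrument would first need its narrow bands mapped to red and near-infrared.

```python
def ndvi(nir: list[float], red: list[float]) -> list[float]:
    """Per-pixel NDVI; pixels with zero total reflectance get 0.0."""
    if len(nir) != len(red):
        raise ValueError("bands must have the same number of pixels")
    return [(n - r) / (n + r) if (n + r) else 0.0 for n, r in zip(nir, red)]


def summarize(index: list[float], healthy_threshold: float = 0.5) -> dict[str, float]:
    """Compact scene summary to downlink instead of the raw bands."""
    healthy = sum(1 for v in index if v >= healthy_threshold)
    return {
        "mean_ndvi": sum(index) / len(index),
        "healthy_fraction": healthy / len(index),
    }


if __name__ == "__main__":
    nir = [0.50, 0.45, 0.30, 0.05]  # toy near-infrared reflectances
    red = [0.08, 0.10, 0.20, 0.04]  # toy red reflectances
    print(summarize(ndvi(nir, red)))
```

Here the downlink is two numbers per scene instead of two full bands. A real product would more likely send a coarse NDVI raster or per-field statistics.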

Secure processing. Certain governmental and defense applications require that data never touch foreign ground stations. Orbital processing ensures intelligence analysis occurs within sovereign space infrastructure, a geopolitical capability that was previously impossible.

The Trajectory Ahead

SpaceX's Starlink constellation currently comprises over 5,000 satellites. Imagine if each carried modest AI accelerators: not H100s, but purpose-built inference chips. The aggregate compute capacity could rival a terrestrial data center, distributed across a global mesh with inherently low-latency coverage.

Amazon's Project Kuiper plans about 3,200 satellites. OneWeb targets 648. China's Guo Wang constellation plans roughly 13,000. Each satellite is a potential orbital compute node. The infrastructure is already being built. The question is whether it gets instrumented for intelligence processing.

Aethero's H100 satellite is a demonstration, not a product. But demonstrations establish feasibility boundaries: they prove what's possible before it becomes economically optimal. The Wright Flyer's first flight covered 120 feet. Within four decades, we had transatlantic passenger service.

The Recursive Compression

Intelligence infrastructure is migrating to where intelligence is needed. For decades, we centralized compute—mainframes, data centers, cloud regions—because centralization enabled economies of scale. Edge computing began reversing that logic: processing at the periphery reduces latency and bandwidth. Orbital AI is edge computing's logical extreme—processing not at the edge of the network, but at the edge of the atmosphere, where data originates.

Think of it as gravity, but for information. Data has mass; moving it costs energy. Processing orbits toward data sources, not away from them. Aethero's satellite demonstrated this principle at 17,500 mph. The H100 in orbit is less a technical curiosity than an architectural inevitability—the first visible crossing of a threshold we've been approaching since the first edge device ran its first neural network.

The principle: intelligence belongs where decisions get made. Sometimes that's a data center. Sometimes it's a smartphone. And sometimes, it's 500 kilometers above the Pacific, racing through the thermosphere at Mach 23, processing terabytes while radiating waste heat into the cosmic microwave background.

The beginning, as promised, is orbital. What follows is atmospheric in scope.
