Market sentiment has undergone a visible recalibration. The S&P 500’s recent volatility—what analysts termed “rattled” rather than panicked—followed Alphabet’s disclosure that it would spend $185 billion on infrastructure in 2026, more than doubling its 2025 outlay. The announcement crystallized a question investors had been avoiding: when does visionary investment become irrational exuberance?

The spending trajectory reveals the inflection point. In Q3 2025 alone, hyperscalers collectively deployed $106 billion. That pace continued through year-end, bringing total 2025 capex to approximately $330 billion. The 2026 projection of $660 billion represents a 100% increase year-over-year, and the way the spending is funded has changed fundamentally. For the first time, internal cash flow cannot sustain the buildout. This marks the transition from self-funded expansion to leverage-dependent growth.
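The year-over-year figure follows directly from the two annual totals quoted above. A minimal sketch of that arithmetic, where the function name `yoy_growth_pct` is illustrative and not taken from any source:

```python
def yoy_growth_pct(previous: float, current: float) -> float:
    """Percentage change from the previous period to the current one."""
    if previous <= 0:
        raise ValueError("previous must be positive")
    return (current - previous) / previous * 100.0


# Totals quoted in the text, in billions of US dollars.
capex_2025 = 330.0
capex_2026_projected = 660.0

growth = yoy_growth_pct(capex_2025, capex_2026_projected)
print(f"Projected 2026 capex growth: {growth:.0f}%")
```

The same helper can be pointed at any pair of annual totals to check a quoted growth rate.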

From Cash-Funded to Debt-Dependent

Hyperscalers raised $108 billion in debt markets during 2025. Projections suggest $1.5 trillion in additional borrowing will be required through 2027. This marks a categorical shift for companies that built their dominance on capital-light business models with operating margins above 30%. The transition introduces interest rate sensitivity, covenant restrictions, and refinancing risk that fundamentally alter the investment profile these companies present to markets.

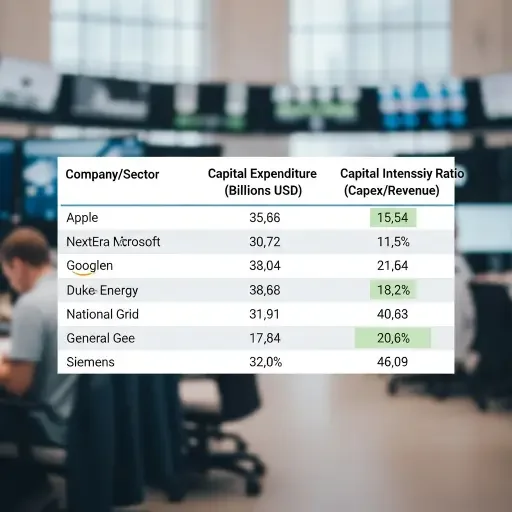

Capital intensity ratios now place Big Tech in unfamiliar territory. Alphabet’s capex-to-revenue ratio reached 57% in its latest quarter. Amazon and Microsoft hover between 45% and 52%. These figures exceed historical norms for technology companies and approach levels typically associated with utilities or heavy industrials, sectors that operate under entirely different regulatory and competitive dynamics.

During the late 1990s telecom bubble, capital intensity among major carriers peaked at 35-40% before the sector collapsed under the weight of overbuilt infrastructure and insufficient demand. The current AI infrastructure buildout has already exceeded that threshold. The shift from asset-light software businesses to infrastructure-intensive operations represents a structural transformation with uncertain return profiles. Software-as-a-service businesses historically achieved 80-90% gross margins precisely because they avoided physical infrastructure. The hyperscaler bet is that AI compute infrastructure will generate similar economics at scale—but this remains unproven at current deployment rates.
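Capital intensity here is capex divided by revenue over the same period. The sketch below compares the ratios quoted in this section against the upper end of the telecom-era peak. The helper names are illustrative, and treating 40% as the comparison threshold is an assumption made for the example.

```python
def capital_intensity(capex: float, revenue: float) -> float:
    """Capex as a fraction of revenue over the same period."""
    if revenue <= 0:
        raise ValueError("revenue must be positive")
    return capex / revenue


# Upper end of the 35-40% telecom peak range quoted in the text
# (using it as a single threshold is an assumption for illustration).
TELECOM_PEAK_UPPER = 0.40


def exceeds_telecom_peak(ratio: float) -> bool:
    """True when a capex-to-revenue ratio is above the telecom-era peak."""
    return ratio > TELECOM_PEAK_UPPER


# Ratios quoted in the text.
quoted_ratios = {
    "Alphabet": 0.57,
    "Amazon/Microsoft (low end)": 0.45,
    "Amazon/Microsoft (high end)": 0.52,
}

for name, ratio in quoted_ratios.items():
    status = "above" if exceeds_telecom_peak(ratio) else "at or below"
    print(f"{name}: {ratio:.0%} is {status} the telecom peak")
```

All three quoted ratios come out above the 40% line, which is the comparison the paragraph above draws.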

The Algorithmic Efficiency Challenge

The DeepSeek development introduced a competing variable into investor models that cannot be ignored. When a Chinese research lab demonstrated comparable performance to leading Western AI systems at approximately 1/27th the training cost, it exposed a critical question: does the $660 billion buildout reflect technological necessity or architectural path dependence?

The market’s reaction suggested sudden recognition that algorithmic efficiency might substitute for hardware scale, undermining the premise that massive compute spending is the only viable approach. If model performance can be achieved through optimization rather than scale, the entire economics of the infrastructure buildout change. At a 1/27th cost ratio, training costs would drop from $100 million per frontier model to under $5 million, and inference costs would fall proportionally. This introduces genuine uncertainty about whether hyperscalers are building capacity for a compute-intensive paradigm that may be superseded by efficiency-oriented approaches before the infrastructure generates adequate returns.
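The "under $5 million" figure can be checked against the 1/27th ratio cited earlier. A minimal sketch using only numbers from the text (the constant names are illustrative):

```python
# Figures quoted in the text.
BASELINE_TRAINING_COST_USD = 100_000_000  # per frontier model
COST_REDUCTION_FACTOR = 27                # "approximately 1/27th the training cost"

# Implied cost of training a comparable model at the reduced ratio.
efficient_cost_usd = BASELINE_TRAINING_COST_USD / COST_REDUCTION_FACTOR

print(f"Implied training cost: ${efficient_cost_usd / 1e6:.1f} million")
```

The result, about $3.7 million, is consistent with the article's rounded figure.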

The analogy is imperfect, but instructive: mobile network operators built extensive 3G infrastructure just as WiFi offloading and software-based optimization reduced data network requirements. The equipment was deployed, the capital was spent, but utilization never reached projected levels. The result was stranded assets and years of depressed returns.

The Enterprise Adoption Gap

Enterprise adoption timelines complicate the ROI calculation in ways that consumer internet never did. While consumer-facing AI applications have scaled rapidly (ChatGPT reached 100 million users in two months), enterprise deployments remain predominantly in experimentation phases. Current GPU utilization across hyperscaler AI infrastructure runs at roughly 40-60%, substantially below the 80-90% threshold required to justify the cost per compute hour.
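One way to see why utilization matters: hardware costs accrue for every available hour, but only utilized hours earn revenue, so the effective cost of a productive hour is the hourly cost divided by utilization. The sketch below uses a normalized hourly cost of 1.0 as a placeholder, not a real price.

```python
def cost_per_utilized_hour(cost_per_available_hour: float, utilization: float) -> float:
    """Effective cost of one productive compute hour at a given utilization."""
    if not 0 < utilization <= 1:
        raise ValueError("utilization must be in (0, 1]")
    return cost_per_available_hour / utilization


# Normalized placeholder cost per available GPU hour (hypothetical, not a real price).
HOURLY_COST = 1.0

# Utilization levels taken from the ranges quoted in the text.
for utilization in (0.40, 0.60, 0.80, 0.90):
    effective = cost_per_utilized_hour(HOURLY_COST, utilization)
    print(f"Utilization {utilization:.0%}: effective cost {effective:.2f}x the hourly cost")
```

Under this simple model, hardware at 40% utilization costs 2.5 times its nominal hourly rate per productive hour, against 1.25 times at 80%.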

The transition from pilot projects to production workloads has not materialized at the pace the infrastructure buildout assumes. Unlike consumer internet services that monetize through advertising at scale, enterprise AI requires direct customer payment for compute resources. The willingness-to-pay curve remains uncertain. Infrastructure deployed in 2026 carries immediate capital costs and debt service obligations. Revenue realization depends on enterprise customers transitioning from experimentation budgets (typically capped at $100K-$500K annually per customer) to production spending that could reach $5M-$50M+ annually. That transition timeline appears to be extending rather than compressing, creating a temporal mismatch between capital deployment and revenue generation.

Physical Constraints Meet Financial Reality

Data center power requirements are projected to increase 50% by 2027 and 165% by 2030. Nvidia CEO Jensen Huang characterized the AI infrastructure buildout as “the largest in human history”—but unlike previous technology booms, this one cannot be accelerated purely through capital deployment. Utility infrastructure, permitting timelines, and grid capacity create hard limits that no amount of spending can immediately overcome.

Several hyperscaler data center projects have experienced 18-24 month delays due to power availability constraints. The infrastructure can be financed and equipment can be ordered, but transformers, substations, and transmission lines operate on physical timelines that capital cannot compress. This creates a scenario where hundreds of billions of dollars in AI hardware sits partially idle waiting for power infrastructure to catch up: capital deployed but not productive, generating debt service obligations without corresponding revenue.

The bond market’s absorption capacity represents another constraint. Corporate debt issuance of the scale required—$1.5 trillion across a concentrated group of tech issuers—will test credit markets’ ability to price and distribute risk. Credit rating agencies have begun flagging leverage metrics. While hyperscalers maintain investment-grade ratings, the trajectory toward 2.5-3.5x net debt-to-EBITDA ratios approaches thresholds that historically trigger downgrades in other sectors. The debt is being issued at floating rates in many cases, creating exposure to interest rate volatility that compounds infrastructure cost uncertainty.
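The leverage metric and the floating-rate exposure described above can be made concrete with a small sketch. Only the $1.5 trillion borrowing projection comes from the text. The floating-rate share, the size of the rate move, and the balance-sheet inputs are hypothetical values chosen for illustration.

```python
def net_debt_to_ebitda(total_debt: float, cash: float, ebitda: float) -> float:
    """Net debt (total debt minus cash) divided by EBITDA."""
    if ebitda <= 0:
        raise ValueError("ebitda must be positive")
    return (total_debt - cash) / ebitda


def added_annual_interest(principal: float, floating_share: float, rate_increase: float) -> float:
    """Extra yearly interest on the floating-rate portion after a rate increase."""
    return principal * floating_share * rate_increase


# Quoted in the text, in billions of US dollars.
PROJECTED_BORROWING_BN = 1500.0

# Hypothetical assumptions, not from the source.
FLOATING_SHARE = 0.5   # half of the debt assumed to be floating-rate
RATE_INCREASE = 0.01   # a one percentage point rise in rates

extra_interest_bn = added_annual_interest(PROJECTED_BORROWING_BN, FLOATING_SHARE, RATE_INCREASE)
print(f"Added annual interest: ${extra_interest_bn:.1f} billion")

# Hypothetical issuer: $400bn debt, $100bn cash, $100bn EBITDA.
leverage = net_debt_to_ebitda(total_debt=400.0, cash=100.0, ebitda=100.0)
print(f"Net debt-to-EBITDA: {leverage:.1f}x")
```

Under these assumptions, each percentage point of rate increase adds about $7.5 billion in annual interest, and the hypothetical issuer sits at 3.0x, inside the 2.5-3.5x range the text flags.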

The Historical Context Problem

At 1.9% of GDP, the AI capex surge approaches the combined scale of the Interstate Highway System, Apollo Program, and rural electrification expansion—compressed into a two-year window. Those precedents, however, involved public coordination and had decades-long payback horizons. The Interstate Highway System took 35 years to complete and generated returns through economic efficiency gains that accrued broadly across the economy. The Apollo Program was a national priority with no expectation of direct financial return.

Private-sector investors typically demand 3-5 year return cycles, creating temporal tension with infrastructure that may require 7-10 years to fully monetize. This mismatch between deployment speed and revenue realization timelines creates vulnerability to any shift in market sentiment or competitive dynamics. The question facing investors is whether $660 billion represents prescient infrastructure investment that will define the next decade of computing, or the foundation of a stranded asset problem on a scale the technology sector has never experienced.

Nvidia’s upcoming earnings will serve as a critical test. Demand sustainability for high-end GPUs will signal whether the infrastructure buildout continues at projected rates or moderates. Order backlog and forward guidance will reveal whether hyperscaler capex projections reflect firm commitments or aspirational targets subject to revision based on enterprise adoption rates and competing technical approaches.

The reassessment underway reflects a delayed recognition that capital availability alone does not guarantee rational allocation. When the cost-effectiveness of a fundamental approach faces competitive pressure, whether from algorithmic innovation or alternative architectures, the entire investment thesis must be re-examined. The question is no longer whether to fund the AI buildout, but whether the current scale and speed reflect technical requirements or momentum-driven overcapitalization fueled by fear of being left behind in what may be the defining technology transition of the decade.

Sources

Alphabet Q4 2025 earnings call and investor presentation, hyperscaler capex data from Meta/Amazon/Microsoft/Google quarterly filings, DeepSeek technical papers and performance analysis, utility sector power demand forecasts, corporate debt issuance data from Bloomberg, historical infrastructure spending comparisons adjusted for GDP